How to develop your evaluation plan

There are 4 key steps to evaluation:

- Developing a logic model

- Collecting evidence

- Analysing and reporting

- Acting on your learning

In the following sections we shall look at each of these in turn.

Developing a logic model

Once you have established what your desired outcomes are (see section 4.2 above), you can begin setting out how these will be achieved. Developing a logic model allows you to map out the links between your aims, activities and outcomes.

A logic model helps to:

- Clarify short-, medium- and long-term outcomes.

- Design and plan a new project.

- Consider the need for your project and what you will do to address that need. It can be helpful to think about what needs to change.

- Identify project or programme risks and how you might manage them.

- Communicate your thinking to people who support or benefit from your work.

- Provide a pathway or road map for measuring progress and identifying evaluation priorities.

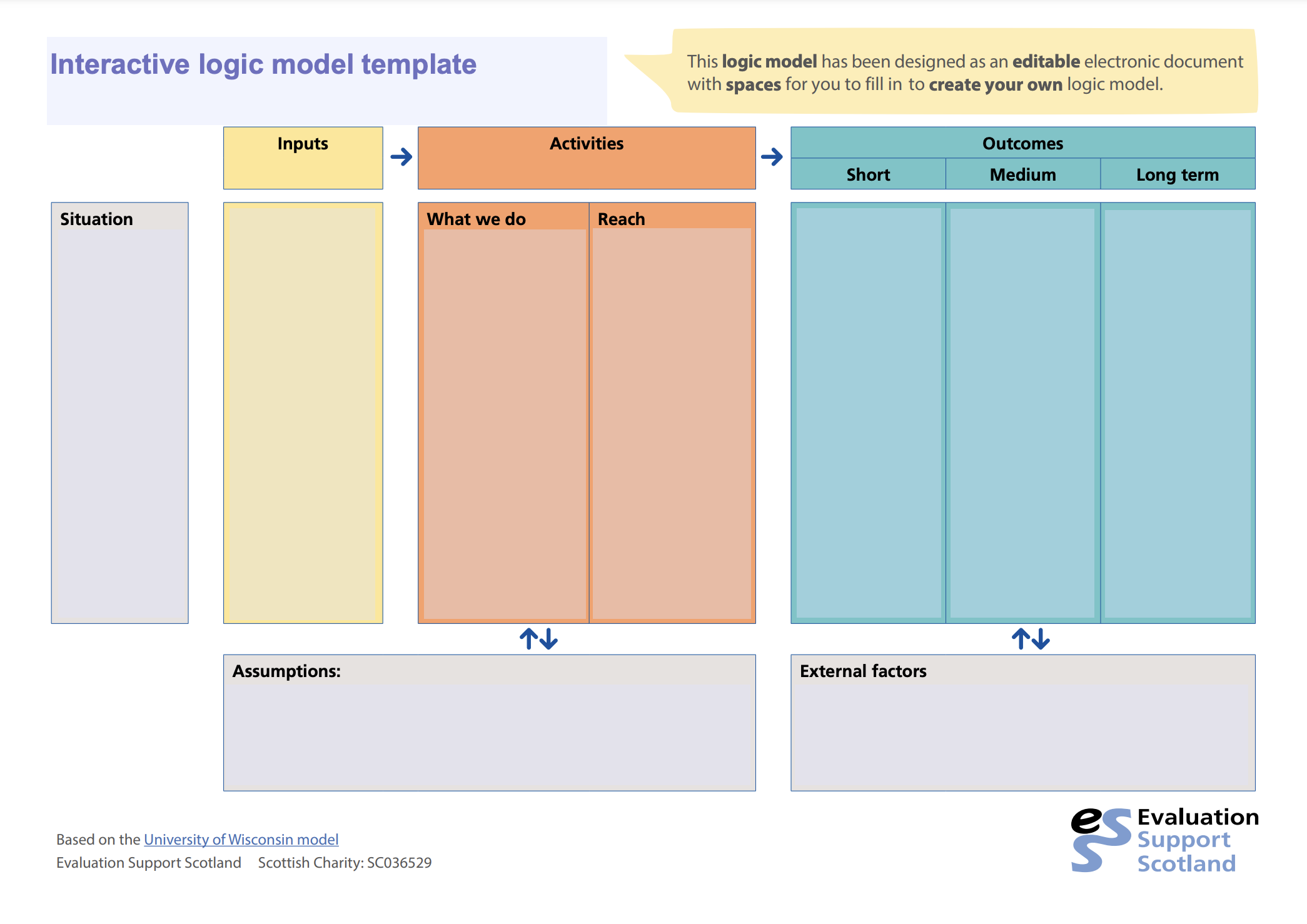

NB: This step can take a bit of time and it is worthwhile using the perspectives of a range of people across your organisation and beyond to strengthen and challenge your thinking. We shall use this helpful template created by Evaluation Support Scotland to show how to develop a logic model:

To help your thinking, consider the following questions as you complete each section of the template:

Situation or Need

- Have we defined the need correctly?

- What is the problem or issue and for whom?

- How do we know?

- Why is this a problem?

- Who cares if it is resolved?

- Who else is helping to resolve this issue and how do we fit in?

- What do we know about the factors causing this issue (from research or experience)?

Resources

- What resources do we need or are we using? (staff, volunteers, supervision, equipment, technology, money, buildings)

- Do we have enough resources to deliver the activities?

Activities and Participation

- What are we doing, or what do we need to do?

- Who are we reaching or targeting?

Outcomes

- What change do we expect as a result of these activities?

- Is it logical that if we deliver these activities it will lead to these outcomes?

- What will happen immediately (short-term outcomes), what is the longer-term change (long-term outcomes) and what will happen along the way (medium-term outcomes)?

- What is a typical journey for our beneficiaries or service users?

Assumptions

At this stage it is worth taking a step back and critically evaluating your logic model. Ask others for their input and feedback. Evaluation Support Scotland have created a series of simple questions to help your thinking at this stage:

- Is it meaningful and worth doing?

You may have accurately identified a problem, but other people might be more concerned about other problems. Ask yourself ‘who cares and why?’

- Is it plausible?

Can those activities really deliver those outcomes? Are you setting yourself up for failure by promising too much? Do you understand how you bring about change in the short and long term? Can the outcomes be sustained?

- Is it doable?

Do you have enough resources to deliver your activities? Are your activities practical as well as desirable? Do you have enough commitment from relevant partners?

- Is it testable?

Will you be able to tell if things are progressing (or not)? Will you be able to convince others?

External Factors

Considering external factors helps you set your work in a broader context and assess what other factors may affect it. It is similar to undertaking a risk analysis.

Consider:

- What changing factors might help or hinder your work with, or impact on, the people or communities you want to benefit? (Political, economic, environmental, demographic, technological, legal). Can you do anything about these factors?

- What agencies can support or threaten your work?


Collecting Evidence

When designing methods to collect evidence and measure your outcomes, you need to consider a number of factors.

These include:

- The characteristics and abilities of the people you work with, e.g. age, literacy levels, language, culture, disability.

- The availability of the people you are seeking evidence from, e.g. internet access, available time.

- The nature and environment of your work, e.g. if you are running individual counselling sessions for survivors of abuse, it wouldn’t be appropriate to seek feedback in a group setting.

- Who else might witness the outcomes you are seeking? Perhaps a family member.

- What methods of capturing information are already in place? Can you avoid duplicating effort?

In essence, you want to collect the most useful evidence whilst keeping collection as easy as possible. Gathering evidence from a mix of sources will increase the strength of your case.

It is important to note that a number of sophisticated, validated tools have been developed to measure the outcomes of wellbeing activities and counselling, e.g. CORE (Clinical Outcomes in Routine Evaluation). These tools allow you to collect meaningful evidence of the clinical impact of your interventions. The use and analysis of these specific tools are discussed in more depth in Section 5.

Further Reading

Evaluation Support Scotland have produced a useful guide to Designing Evidence Collection Methods

Mini guides to different collection methods are also available

Analysing and Reporting

By now you will have collected a range of information from different sources on your progress against your outcomes. The next step is to piece these together, analyse the results and report your conclusions on what has happened and why.

Further reading on analysing information

Evaluation Support Scotland have produced a guide to help you analyse evaluation information


Acting on your learning

Don’t forget this important step.

You can use what you have learnt to:

- get better at what you do, e.g. improve services or motivate your staff

- involve and engage service users in your work, e.g. using their feedback to encourage new referrals or co-develop new services

- get more funding, e.g. to expand a service into new areas or build sustainability. NB: Funders expect evaluations to identify areas that can be improved. Showing that you have incorporated this learning can be more important than having fully achieved your outcomes.

- lobby for change – in government policy or local authority practice. Statistics and case studies can be powerful agents of change and tell the story of your service or the need you are addressing.

- improve wider understanding of what works and why. This is important in the area of support for survivors where a wide range of support and counselling is available. Building our understanding of what works benefits everyone.

Questions to ask as you decide how best to implement your learning:

- Who do you need to communicate your learning to?

- What difference do you want your learning to make once it is used or communicated?

- What forces will help or hinder you in using the learning successfully?

- Who needs to be on board?

- What do you need to do to use or apply the learning?

- What are the logical steps involved in getting you there?

- What are the implications for staff, resources, systems and processes?

- How will you know you have succeeded?

- What will you do next?

Further reading

Evaluation Support Scotland Guide to Using what you learn from Evaluation
